Privacy Manager

An extension to the Privacy-Enhanced Android OS that helps users make informed privacy decisions through granular controls centered on the purposes of data collection

September 2019 - April 2020

UX Researcher

Surveys / Interviews / Participatory Design / Concept Tests / Security Design / Evaluative

Overview

New Update Open-source release slated for May 2020 🎉🎉

Sponsor DARPA

Story

Team Jason Hong (Project Lead) / Ritu Roychoudhury / Shan Wang / Mike Czapik / Bobby Zhang / Tianshi Li / CHIMPS

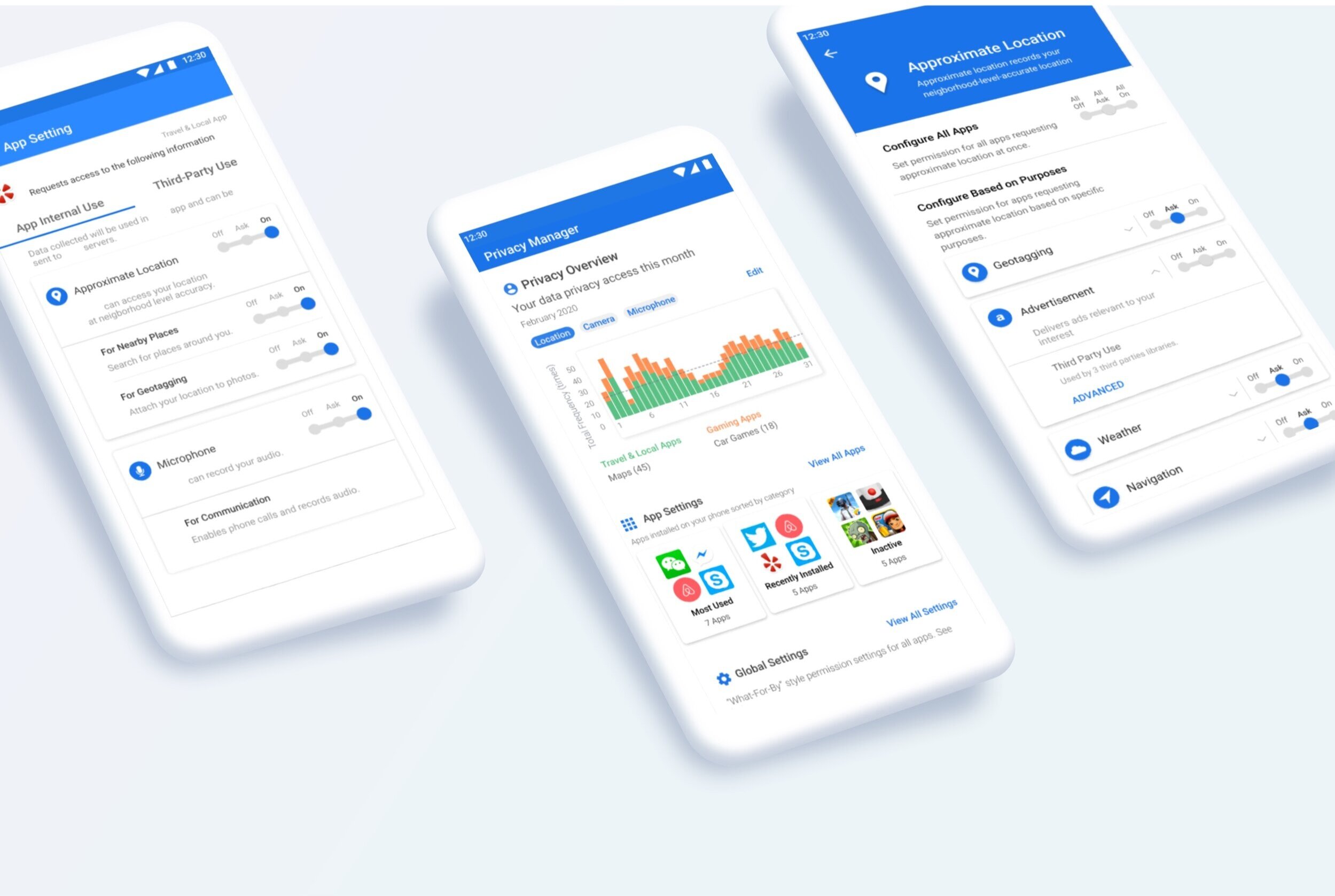

Privacy-Enhanced Android (PE Android) is an experimental operating system for researchers and developers to investigate new privacy-preserving technology. Privacy Manager is an extension to PE Android that lets users control apps’ access to their personal information through fine-grained privacy controls centered on “purposes”, giving people context as to why sensitive data is being requested.

As the lead researcher, my role is to design studies that address both user behavior and attitude, generate insights that simultaneously fuel ideation and validate designs, and collaborate with designers/developers to create and share research findings.

Research Impact Led research effort to pave the way for an entire redesign of global/app settings flow, discovered new design opportunities for building recommendations and privacy overview visualization through generative research, and aligned next priorities for design/development to ship design for implementation

Please note we have no affiliations with any of the app names mentioned in the UI

PROBLEM

Current problem space with Android 10

Android 10 explains permissions only through permission-level descriptions. Permission requests appear at install time and at runtime, but they provide no context as to why a permission is being requested or where the sensitive data is going.

As it currently stands, there is little support for users to understand the purpose of smartphone apps requesting access to sensitive data like location, camera, microphone, and other permissions.

RESEARCH PROCESS

Literature review and desktop research

To get an initial read on the problem space, I reviewed the literature and performed desktop research to learn how this project began four years ago, focusing on the creation of purpose taxonomies and on demo videos of development to date. I also reviewed research previously conducted by other students.

Previous studies have explored which factors affect how people set privacy preferences for smartphone apps and found that understanding why an app requests sensitive data can significantly influence whether a user grants permissions (Shih et al. 807). As one way of inferring why mobile apps collect data, PrivacyGrade, a tool developed at the Carnegie Mellon University CHIMPS lab, infers purposes of data collection by analyzing the third-party libraries that request sensitive data.

SOLUTION

Privacy-Enhanced Android and Privacy Manager

To pave the way for new privacy-preserving technology, Privacy Manager has been designed and prototyped at CMU. Privacy Manager is an extension to Privacy-Enhanced Android (experimental operating system) that enables users to control apps’ access to sensitive data by providing granular privacy controls centered around purposes, explaining why data is being requested.

Privacy Manager

Privacy Manager provides a monthly or weekly visualization of the most frequently accessed permission data types, broken down by app category.

It serves as a point of entry for app settings and global settings.

Recommendations for permission settings are provided so that users can use their previously configured settings and past behaviors as a way to inform their privacy decisions.

For a more detailed look at the design process, please refer to Shan's website.

App Settings

The app settings provide granular privacy controls for each permission requested by the app, grouped by app internal use and third party use.

It provides users more context for why sensitive data is being requested with specific purpose indicators. This will also appear when a user installs a new app.

Global Settings

The global settings enable users to adjust granular privacy controls across all apps at once.

The advanced option guides users to control access to third party libraries.

TIMELINE

Running in sprints

The timeline for this project was a little tight, as we were preparing to go into design freeze in February so that we could ship the design for implementation. During the first few weeks, I learned more about the smartphone privacy ecosystem and strategized next steps with the team to ramp up the speed of research. I devised a plan to have design and research initiatives running in parallel by November.


PREVIOUS STATE

App Settings and Global Settings

The two primary flows behind Privacy Manager, including app settings and global settings, were a huge focus of my research. The app settings flow (displayed below) shows users the privacy settings for a particular app installed on the phone. The three levels of controls are based on:

1) Permission addressing the “what” (what type of data)

2) Purpose addressing the “why” (what reason for requesting the given permission)

3) Third-party use addressing the “where” (giving people granular control to configure data access to specific library types)

The uncommon and common categorization refers to whether the requested permission is common for the given app category or not.

Some parts of the UI where app names are mentioned are blurred because this project is not affiliated with any of those apps

The global settings flow (displayed below) is very similar to the app settings flow, except it serves as a central location where people can configure their privacy settings across all apps at once.


Identifying gaps

Previous usability tests were based on convenience sampling, which raised questions about who the primary target audience for this product was. There were also no benchmarks to refer back to when tracking improvements. Most importantly, based on brief findings from informal usability tests, it was clear that we needed to dive deeper into how well people understand the privacy policies shown in the app.

It was also important to consider people’s behavioral attributes so that we could talk to the people most likely to use our product.

Reviewed data from previously conducted informal think aloud usability tests

Sampling and recruiting

Who do we want to talk to?

- Current Android users who care about privacy

- Used Android device for at least one year

- People who check phone privacy settings at least once a month

- At least 18 years old

- Mix of men and women

- Local to Pittsburgh, near CMU campus (for in-person studies)

- Range of educational backgrounds (technical, non-technical)

Writing out the screener survey and building two separate screeners to collect responses from both ends: MTurk workers and local Pittsburgh people (for in-person studies)

Recruiting via flyers posted near CMU neighboring areas / via Slack groups and local Privacy and Cybersecurity-oriented groups/associations

SURVEY

What do we want to know?

The team wanted to quantify the understandability issues that arose in previous research to allow for more precise decisions when supporting future design/development efforts. I used surveys because they are a fast and relatively inexpensive method.

Our Goal:

To know how many Android users can understand the policy card at the app settings level

Finding the North Star:

- What percentage of respondents can understand why permissions are being requested?

- What percentage of respondents understand where their data is going?

Our Hypothesis:

At least 50% of the respondents will be able to identify what purposes the permissions are being requested for and at least 40% will understand where their data is being sent to because the purpose names are more visually apparent than third party names

Designing the survey

I used closed-ended, categorical questions on Google Forms so that I could more easily normalize and visualize the data.

- 12 questions total (kept short to protect the response rate)

Sample of questions with embedded screenshots of the design:

- What does this permission allow the app to do?

- Where is your permission data going?

- Where is your permission data for this specific purpose going?

Screenshot of the sample of categorical questions

I released the survey through Mechanical Turk for global reach. We distributed the screening survey with a cap of 300 respondents. Of these 300, 109 qualified; I sent the full survey to 100 of them and received 93 responses.
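As a quick sanity check on the recruiting funnel, the figures in the paragraph above can be turned into rates. This is only an illustrative sketch: the variable names are made up here, and the two rates are simple ratios rather than anything reported in the study itself.

```python
# Figures taken from the paragraph above; variable names are illustrative.
screened = 300    # cap on screening-survey respondents
qualified = 109   # respondents who passed the screener
invited = 100     # qualified respondents who were sent the full survey
completed = 93    # full-survey responses received

qualification_rate = qualified / screened  # share of screened respondents who qualified
response_rate = completed / invited        # share of invited respondents who responded

print(f"Qualification rate: {qualification_rate:.1%}")
print(f"Response rate: {response_rate:.1%}")
```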

Respondents struggle to identify why apps need access to a permission

After visualizing the data in Tableau, I noticed the following patterns:

- Only 30% of respondents correctly identified all the purposes for which apps needed access to a given permission

- 12% of respondents actually chose an entirely incorrect combination of answers

Respondents find it difficult to understand where their data is being sent to when a purpose behind the requested permission isn't given

- Only 20% of respondents were able to correctly identify where their data is going when not knowing the purpose

- Interestingly, 55% of respondents correctly identified where their data is going when the purpose for the requested permission was given
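One way to read these results against the earlier hypothesis (at least 50% identifying purposes, at least 40% understanding where data goes) is a simple side-by-side comparison. The sketch below is illustrative only: the percentages come from the text, but the pairing of each observed figure with a hypothesis target is an assumption made here, not something the study states.

```python
# Targets come from the stated hypothesis; observed shares come from the survey results.
# ASSUMPTION: which observed figure answers which target is chosen here for illustration.
HYPOTHESIS = {"identify_purposes": 0.50, "identify_destination": 0.40}

OBSERVED = {
    "identify_purposes": {"all purposes correct": 0.30},
    "identify_destination": {
        "purpose not given": 0.20,
        "purpose given": 0.55,
    },
}

def compare(hypothesis, observed):
    """Return (question, condition, observed share, target, met?) rows."""
    rows = []
    for question, target in hypothesis.items():
        for condition, share in observed[question].items():
            rows.append((question, condition, share, target, share >= target))
    return rows

for question, condition, share, target, met in compare(HYPOTHESIS, OBSERVED):
    status = "met" if met else "not met"
    print(f"{question} ({condition}): {share:.0%} vs target {target:.0%} -> {status}")
```

Under this pairing, only the "purpose given" condition reaches its target, which is consistent with the observation that stating the purpose helps respondents work out where their data is going.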

PARTICIPATORY DESIGN ACTIVITIES

What do we want to know?

To fill in the gaps from the survey results, I used participatory design methods with 5 participants in one-on-one, one-hour sessions to better understand people’s needs, preferences, and how they prioritize certain information over others for future design opportunities. For all in-person studies, I created a separate screening survey to gather a pool of pre-screened participants and save time.

Our Goal:

To recognize where the gaps lie in people’s understanding of the relationships between permissions, purposes, and third party libraries at app settings and global settings level and better understand what people’s priorities are across all these different moving parts

Finding the North Star:

- What are the gaps in participants' understanding of permissions, purposes, and third party libraries?

- What are their priorities when making changes to their privacy settings at different granular levels?

Planning participatory design session

I split the participatory design activities into two parts:

1) Using pens and printouts of design mockups of app settings and global settings and progressively showing one design at a time, having participants take me through the designs and talk about what might come next so that I can better understand their expectations. I had participants freely mark up the printouts - highlighting confusing parts or helpful key parts.

2) Running a flexible modeling activity with paper artifacts to understand what participants prioritize across these different moving parts. I gave them paper UI components and blank pieces of paper so that they could present their ideas physically.

Planning activities through a collaborative Mural board

Participatory design activities in action

I included one designer when co-creating with the participant, and one developer and one designer in the note-taking process. During the second activity, I presented the previously marked-up mockups as a point of reference so that participants could immediately recall which parts were helpful or not helpful while creating.

Participants freely marking up printouts of design mockups and engaging in the flexible modeling activity

Analyzing artifacts and data sources

During the analysis, I color-coded all my data across participants based on attitudinal and behavioral data captured through video recordings, raw notes, quotes, transcription, and artifacts/photographs.


Collaborative synthesis process

For the synthesis, I led affinity diagramming sessions with designers to think about the opportunities that emerged from the themes. Seven clusters emerged: 2 opportunity clusters based on gaps we hadn’t addressed yet, 3 need-based clusters based on how participants prioritize certain information over others, and 2 problem clusters based on pain points.


Pain points to address

From the synthesis, I identified key pain points to address:

- The learning curve for Privacy Manager is relatively high for all participants because there are no text descriptors explaining what each purpose name and third party library name means

- Missing context on how purposes and permissions are related has most participants asking for clarification

- Low discoverability of the collapse/expand menu left most participants frustrated because the ability to control access to permissions based on purpose wasn't clear

Design opportunities

Based on pain points and key user needs, I proposed design opportunities to help users feel more confident in making their privacy decisions:

- Consider increasing the discoverability of third party use controls

- Refer back to familiar Android UI design patterns to match users' expectations of seeing a list of apps under each purpose in global settings

- Consider placing more emphasis on purpose level controls, separated into internal and third party use, as most participants didn't find value in categorizing permissions by whether they are uncommon or common for an app category

Kicking-off design sprint

This led us to generate design concepts for the next iterations of the app and global settings flows. I facilitated ideation sessions to brainstorm different design solutions that incorporate the key focus areas.

Invited developers/designers to include them in research process and help the team fluidly brainstorm ideas together based on participant-informed data

How Might We?

How Might We improve people's understanding of why their data is being accessed and where the data is going to?

How might we help people adopt this new way of controlling their privacy by helping them easily manage their settings for granular control?

Uncovering new questions

I realized that we know a lot about what people don’t understand about the designs, but the team has been lacking research on Android users’ needs.

- How are they currently configuring their privacy settings?

- What hacks are they using to get around limitations of the current experience?

SEMI-STRUCTURED INTERVIEWS

What are we hoping to uncover?

To answer these newly uncovered questions, I conducted semi-structured interviews with 7 participants in one-on-one, one-hour sessions.

Our Goal:

- To understand people’s current practices of managing their phone privacy settings

- To learn how we can help people make more informed decisions based on their current practices, and where they feel there are challenges and opportunities

- To improve the existing product to better fit unmet needs

Finding the North Star:

- How do participants currently make decisions about their phone privacy settings?

- What are participants’ mental models about configuring phone privacy settings?

Planning interview guide

I planned for a two part interview:

1) Starting with open-ended questions to gauge their current experiences and attitudes

2) Using picture cards as a way to provide a tangible anchor point for participants to use to help recall stories.

Sample of context questions about their experiences, attitudes, and problems:

- Describe what comes to your mind when you think of your phone privacy settings. Why do you think that?

- Walk me through a time when you faced a problem while configuring your phone privacy settings. How do you currently solve this problem?

Sample of questions for debriefing:

- If you have 3 wishes to make your experience of configuring phone privacy settings better, what would they be?

Snippet of interview guide and picture cards

Interviews in action

After pilot sessions, I conducted interviews alongside two designers who engaged in the note taking process and observed the session first-hand to better understand the participants’ struggles.

Most participants directly showed their phone or used the picture cards when recalling stories

Analyzing interviews

During the analysis, I watched video recorded conversations to capture key quotes/moments and coded through transcribed data. These codes were clustered into themes: emotions/feelings, current ways of setting privacy, pain points/struggles, use of other information sources, and needs.

Coding the data and pulling out key quotes

Clustering the codes into themes let me discuss the emergent themes with the team.


Moments of surprise and struggle

"Okay, interesting...I didn’t realize that I had given permission to this!"

"Wish it can take a moment to remind you of previously set permissions to help me continually consent to it even after some time passes - I think I would then trust it even more"

"I just go to the app's features and figure out on my own why they would need particular permissions or even search through app reviews on Google"

Creating models to communicate research insights

To better communicate my findings, I created a model to highlight key mental model components such as installing an app and granting or revoking permissions. This gave the designers and developers a shared understanding of key design opportunities so that they could start building out concepts based on users' mental models.


Kick-off conversations

I wanted the team to start thinking - How can we help people adopt this new way of controlling their privacy by making people’s intentions the focal point of the design?

Brainstormed ideas ranging from recommendations based on expert advice or past behavior to visualizations of what type of data is being accessed by which apps

How Might We?

How Might We enable people to be continually informed about when their data is accessed?

How Might We reduce people’s cognitive load when they configure privacy settings?

CONCEPT TEST: APP AND GLOBAL SETTINGS

What are we hoping to uncover?

With the redesigns of app and global settings from the last design sprint, I ran concept tests with 12 participants in one-on-one, 30-minute sessions. Using a between-subjects design, six participants were shown two design concepts for app settings and the other six were shown two design concepts for global settings. To avoid order effects, within each group three participants saw concept A first and then B, while the other three saw B and then A. In parallel, designers were working on new solutions to address the needs captured in the interviews.
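The counterbalanced assignment described above can be sketched in a few lines. This is a minimal illustration, not the procedure actually used in the study: the participant IDs, the random shuffle, the fixed seed, and the function name `assign_counterbalanced` are all assumptions made for the example.

```python
import random

def assign_counterbalanced(participants, seed=0):
    """Split participants across the two flows, then balance concept order.

    Half of the participants see the app settings concepts and half see the
    global settings concepts (between-subjects). Within each half, the first
    half sees concept A then B and the rest see B then A.
    """
    rng = random.Random(seed)  # fixed seed so the example is reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)

    half = len(shuffled) // 2
    groups = {
        "app settings": shuffled[:half],
        "global settings": shuffled[half:],
    }

    schedule = []
    for flow, members in groups.items():
        for index, participant in enumerate(members):
            order = ("A", "B") if index < len(members) // 2 else ("B", "A")
            schedule.append({"participant": participant, "flow": flow, "order": order})
    return schedule

# Hypothetical participant IDs P1..P12
schedule = assign_counterbalanced([f"P{n}" for n in range(1, 13)])
for row in schedule:
    print(row)
```

With 12 participants this yields six per flow and, within each flow, three per presentation order, matching the split described in the text.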

Our Goal:

- To validate how we address people’s needs: 1) Need to easily understand why their data is being accessed and where the data is going 2) Need to easily discover the granular controls at different levels

- To analyze which parts of each concept align with fulfilling these needs, to confirm direction and use this new understanding for future iterations

Finding the North Star:

- Does this idea address the core needs for people? Why or why not?

- What do people expect and what could be better about this idea?

- Which part of each design concept appealed to them the most? What does it tell us about their mental model and preferences?

App Settings Design Concepts

The two design concepts for app settings are shown below. They have similar content but use different design patterns and intentions, placing the entry points for controls in different places. I wrote the intent under each concept with the designers so that we were all aligned on what makes each concept unique and how it addresses user needs in different ways.

Original (left), revised (right)

Changes

- Granular purpose-level controls and third party-level controls are presented earlier on to ensure higher discoverability and quicker access

- Text descriptors for all permissions, purposes, and third party libraries have been added

- Permissions are no longer categorized based on uncommon vs. common but now based on app internal use vs. third party use

Global Settings Design Concepts

The two design concepts for global settings are displayed below. Concept A highlights the ability to control multiple apps collectively based on purposes, and concept B leads from the global settings to individual app settings through a list of apps requesting the permission.

Original (left), revised (right)

Changes

- Text descriptors for all permissions, purposes, and third party libraries have been added

- A list of apps is shown below each purpose to align with familiar Android UI patterns

Planning Concept Tests

I planned for a 3 part concept test:

1) Checking in on the core problems/limitations that users face when configuring phone privacy settings before showing the concepts, and learning about their existing solutions

2) Briefly describing and showing the concept to seek reactions

3) Comparing the different parts of each design concept

Sample of introductory questions:

- What are the current limitations that you experience when configuring your phone privacy settings?

- How do you currently go about solving this problem, if at all?

Sample of follow-up questions when introducing concepts:

Let’s say when you check your privacy settings on your phone, this is a rough idea that appears and allows you to control your privacy settings for a particular app.

- You previously mentioned the problem about XYZ. How do you think this idea is helpful or not helpful in solving the limitations that you face? Why or why not?

Sample of questions for comparison between concepts:

- Which parts of each idea would you combine to create a new, better version? (Giving the freedom to draw on a blank paper)

Snippet of concept test protocol and paper design concepts

Concept Tests in Action

I ran concept tests with one designer who took notes and recorded the session so that we could loop in teammates who weren’t there to observe first-hand. I printed the design concepts on paper so that participants could mark them up freely with a pen and move them around.

Analyzing Data from Concept Tests

During the analysis, I played back the recorded sessions to capture key quotes and to note what participants didn’t understand or found confusing or not useful, as well as what they preferred or what matched their expectations.

Playing back recorded sessions

I clustered the notes into high-level themes (emotions, pain points, opportunities, and thoughts/attitudes), supported by sub-themes covering what works and what doesn’t for each concept and the design opportunities for different parts of each concept.

Affinity mapping notes from app settings design concepts

Affinity mapping notes from global settings design concepts

Key Insights

App Settings Concept A

WHAT WE DID WELL:

- Most participants preferred segmented view of app internal use and third party use classification to avoid long scrolling

- Most participants found it useful that all information is stored in a central location as a brief summary of what every app is collecting, as it allows them to be more conscious and increases transparency about where the data is going

“I really like that there’s a separate section for third party and internal use in [App A] because third party use is something I don’t understand really well and would like to have more control over”

“This is better than what I have right now with Android - useful in helping me understand what my apps can do and configure them in a way that makes me feel comfortable”

App Settings Concept B

WHAT WE DID WELL:

- Most participants preferred to see a list of permissions requested by each app, as it helps them narrow down to each permission individually

- Some participants found it convenient to control all purposes under one permission together

“Having a list of permissions helps me to prioritize which permissions I want to manage or care more for”

“Not having to scroll to view permissions like in [App A] makes manual configuration easier in [App B] since permissions are all listed under one place”

DESIGN OPPORTUNITIES:

- Consider disclosing the purpose level control for advertisements first before disclosing all the individual third party library information as it may overwhelm the user without providing clear next steps

- Consider clearly communicating the different states of controls (when selected and unselected) and demonstrating the value of controls for multiple sub-options (purpose level) that are grouped under a parent option (permission level)

DESIGN OPPORTUNITIES:

- Avoid placing third party use controls at the bottom, as this may make them hard for users to discover and take advantage of

- Consider removing the two controls that allow for controlling individual purposes and all purposes, as they take about the same number of touchpoints

Highlighting which parts of the concepts participants found useful

Key Insights

Global Settings Concept A

WHAT WE DID WELL:

- Most participants appreciated the grouping of different apps based on purposes as it gives them a shortlist of apps to focus on and allows them to think in terms of what the data is being used for rather than by the broad name of permission alone

“This allows me to learn about which apps are using my data for what purpose quickly”

“Gives a better standard in setting your privacy settings in case you mess up and give out data for odd purposes. In [Global B], you would have to check one by one, whereas this helps to guard against that mistake”

Global Settings Concept B

WHAT WE DID WELL:

- Most participants expressed that being able to customize individual app settings is preferable

“Having individual app settings from global settings is useful because I want to selectively set a few differently”

“This is a great way of managing settings, allowing me to flexibly control for specific apps”

DESIGN OPPORTUNITIES:

- Provide flexibility to enable users to control settings on an app-by-app basis

- Consider clearly communicating with a visual indicator when override statuses occur - when users override the global settings by configuring individual app settings differently

DESIGN OPPORTUNITIES:

- Provide more flexibility to users by allowing them to control multiple apps globally while also allowing them to dive into individual app settings

- Consider enabling users to sort apps based on what the permission is being requested for so that they can quickly learn which apps are using data for what reason

Highlighting which parts of the concepts participants found useful

CONCEPT TEST: GRAPHS AND RECOMMENDATIONS

What are we hoping to uncover?

Using the same study design and the same 12 participants, in one-on-one, 30-minute sessions, I showed participants design concepts for the recommendations page and the privacy overview graph. In parallel, designers were iterating on the app and global settings based on the research recommendations.

Our Goal:

- To validate how we address people’s needs: 1) Need to be continually informed about when their data is being accessed and where the data is going 2) Need to reduce cognitive load on their end when configuring privacy settings

Finding the North Star:

- What do people expect and what could be better about this idea?

- Which part of each design concept appealed to them the most? What does it tell us about their mental model and preferences?

Privacy Overview Graph Design Concepts

The two design concepts for privacy overview graphs are shown below. They have similar content but are rendered differently through different design patterns and intentions by putting more focus on either app categories or data types.


Recommendation Page Design Concepts

The two design concepts for the recommendations page are displayed below. I helped prototype Concept A in Figma; it highlights the ability to view apps that are collecting data in the background and to view recommendations grounded in users’ previously configured settings for other apps in the same app category. Concept B focuses on quick access to revoking permissions across multiple apps based on inactivity or access to sensitive data types.


Concept Tests in Action

Analyzing Data from Concept Tests

I played back the recorded sessions to capture key quotes, collecting what participants didn’t understand or found confusing or not useful in the red bucket and what they preferred or found useful in the green bucket.

Miro board with affinity mapping

Key Insights

Graph Concept A

WHAT WE DID WELL:

- All participants expressed that viewing access to sensitive data types in a monthly view enabled them to learn more quickly across the board

- Most participants found that having different sensitive permission types to toggle between gave way for more flexibility

“Knowing how much data I have given to apps overall and what categories of data types have been accessed is really helpful”

“Being able to visualize access for each permission individually by just toggling from one type to another makes it really easy”

Graph Concept B

WHAT WE DID WELL:

- Some participants valued the greater emphasis placed on data types rather than on category of apps

“Being able to see access to my location and camera data together is a quick way to view a lot of information at once”

DESIGN OPPORTUNITIES:

- Consider providing a clear breakdown of individual apps within the app category that are frequently accessing the permission for actionability

- Give users the ability to control which sensitive permission types they can choose for added flexibility

DESIGN OPPORTUNITIES:

- Consider linking the ability to go into individual app settings from the privacy overview graph based on apps that frequently accessed particular data types

Highlighting which parts of the concepts participants found useful

Key Insights

Recommendation Concept A

WHAT WE DID WELL:

- Most participants said that basing recommendations on their personal app usage felt thoughtful, as it sounds less biased

“Compared to just telling me what to do, I like how it’s based on my own usage of apps and not just general tips”

Recommendation Concept B

WHAT WE DID WELL:

- Some participants appreciated the clean, organized chunks of information, as they made the next steps for revoking permissions clear

DESIGN OPPORTUNITIES:

- Consider adding higher visual contrast between the proposed recommendation and reason behind it to reduce users’ initial effort in trying to learn this distinction

DESIGN OPPORTUNITIES:

- Avoid presenting recommendations that sound opinionated or biased as we want recommendation systems to be not tied to any particular apps or libraries

- Consider adding a feedback loop where users are able to view additional information on where the recommendation came from

“I'm usually a fan of simplicity, but not so much when it comes to my own privacy”

Highlighting which parts of the concepts participants found useful

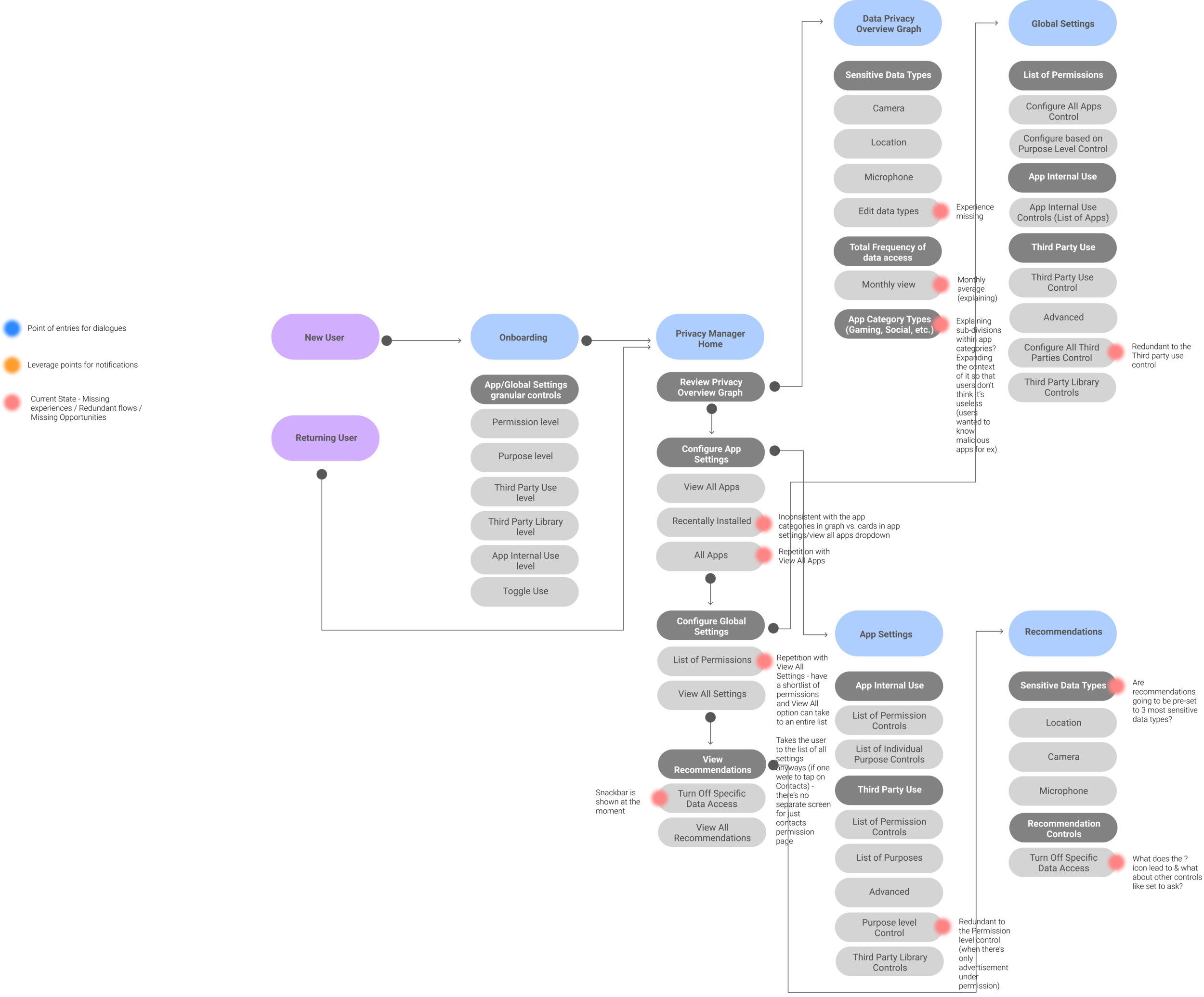

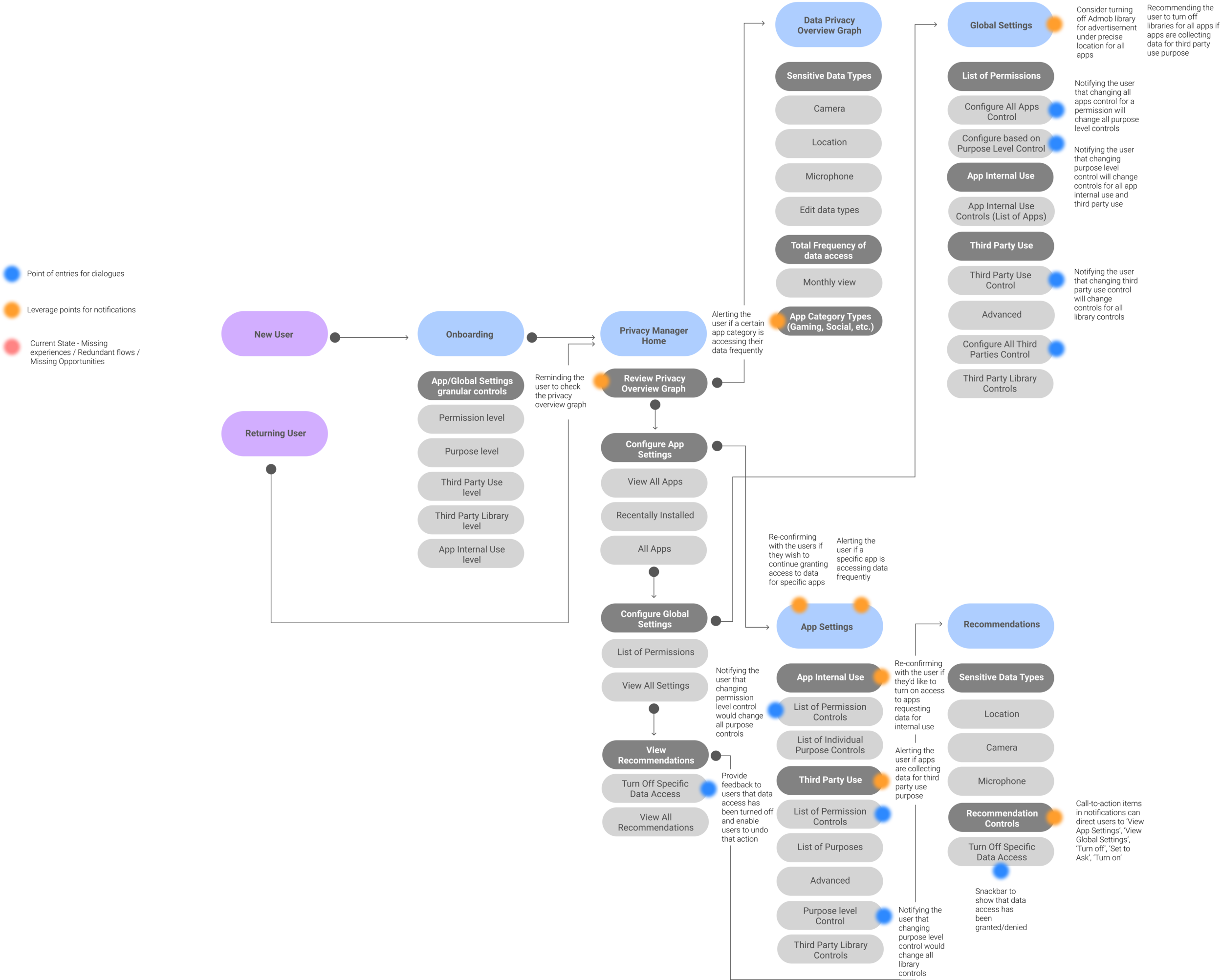

Creating Flow Diagrams

While the designers were iterating on the designs from the previous concept tests, I created flow diagrams to identify missing experiences and opportunities in the current flows. Based on the interviews and concept tests, I noticed that most participants wanted to be notified when sensitive data is accessed and when permissions are left unchecked. I also wanted to plan for cases where users override manually configured sub-options (purpose-level controls) by changing a parent option (permission-level control). Leveraging heuristics such as helping users recover from errors and supporting recognition over recall, I mapped out possible entry points where notifications and dialogs could surface and added them to the flow diagram, so that I could use it as a boundary artifact when discussing new design opportunities with designers and developers.

Usability Testing with Prototype on Figma

The designers incorporated the recommendations from the concept tests into a Figma prototype for usability testing. We used the Figma Mirror app to preview the prototype live on a Google Pixel 2 XL device.

Moderated In-Person Usability Test

What are we hoping to uncover?

After supporting the team with generative research to fuel ideation for redesigns and surface untapped design opportunities, I moved into evaluative research: moderated, in-person usability tests with 5 participants in one-on-one, one-hour sessions.

Our Goal:

- To evaluate whether users understand why certain permissions are being accessed and know how to control for third party use in global settings and individual app settings

- To identify barriers while participants go through the main tasks

- To evaluate whether users can easily understand the information shown on the graph and in the recommendations

Finding the North Star:

- How difficult or easy is it to complete key actions on Privacy Manager?

- What parts of the flow make users confused or stuck?

- What parts of each feature component worked well or didn’t work well for users and why?

Determining what UX metrics to use to achieve the goals

Observational metrics:

- Time-on-task

- Task success rate

- Error rate

Post-test session questionnaires with ratings and multiple choice:

- Ease of use and perceived difficulty (evaluative questions after each task, rated on a 7-point scale)

- User satisfaction rating (5 point scale)

- NPS

- UMUX-LITE (a two-item questionnaire whose score can be converted to a SUS-equivalent score)
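As a rough illustration of how the last two questionnaire metrics are typically scored, the sketch below computes NPS from 0 to 10 likelihood-to-recommend ratings and converts two 7-point UMUX-LITE items into a SUS-equivalent score using the commonly cited regression-adjusted formula. The case study does not state which conversion was applied, so the formula choice is an assumption, and all response values are hypothetical.

```python
from statistics import mean


def nps(ratings):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


def umux_lite_to_sus(item1, item2):
    """SUS-equivalent score from two UMUX-LITE items rated 1-7.

    Assumes the regression-adjusted form:
    0.65 * ((item1 + item2 - 2) * 100 / 12) + 22.9
    """
    raw = (item1 + item2 - 2) * (100 / 12)
    return 0.65 * raw + 22.9


# Hypothetical responses from five participants (not the study's data).
recommend = [9, 10, 7, 8, 6]
umux_items = [(6, 6), (5, 6), (7, 6), (5, 5), (6, 7)]

print(nps(recommend))
print(round(mean(umux_lite_to_sus(a, b) for a, b in umux_items), 2))
```

With only five participants, each person shifts NPS by 20 points, so the score is best read alongside the qualitative feedback.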

Aligning what success means for the team

Task-level:

- All tasks should take no longer than 3 minutes

- All tasks should have at least 70% success rate

- Error rate should be at most 25% for all tasks

- Average ease of use should be at least 5 for all tasks

Test-level:

- Satisfaction ratings across all users should be at least a 4

- Average NPS score should be at least 0

- Converted average SUS score should be at least 68 (the benchmark average)
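The criteria above can be expressed as a simple pass/fail check. This is a minimal sketch under stated assumptions: the 3-minute limit is applied to the mean time-on-task, error rate is taken as the share of attempts with at least one error, and every value in the example is hypothetical rather than taken from the study.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Attempt:
    seconds: float     # time-on-task
    succeeded: bool    # task completed successfully
    made_error: bool   # at least one error during the attempt (assumed definition)
    ease: int          # ease-of-use rating on a 7-point scale


def meets_task_criteria(attempts: List[Attempt]) -> bool:
    """Check one task against the task-level thresholds."""
    n = len(attempts)
    success_rate = sum(a.succeeded for a in attempts) / n
    error_rate = sum(a.made_error for a in attempts) / n
    return (
        mean(a.seconds for a in attempts) <= 180   # 3 minutes, applied to the mean
        and success_rate >= 0.70
        and error_rate <= 0.25
        and mean(a.ease for a in attempts) >= 5
    )


def meets_test_criteria(satisfaction: List[int], nps: float, sus: float) -> bool:
    """Check the session-level thresholds."""
    return mean(satisfaction) >= 4 and nps >= 0 and sus >= 68


# Hypothetical data for one task with five participants.
task = [
    Attempt(95, True, False, 6),
    Attempt(140, True, False, 5),
    Attempt(200, False, True, 3),
    Attempt(110, True, False, 6),
    Attempt(80, True, False, 7),
]
print(meets_task_criteria(task))
print(meets_test_criteria([4, 5, 4, 4, 5], nps=20, sus=70.0))
```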

Our Hypothesis:

- Users will find it easy to understand when controlling for internal/third party use in global settings and individual app settings. We will have demonstrated this if our tasks for app settings and global settings flow meet the task-level success criteria above

- Users will be satisfied with the experience and find Privacy Manager useful and easy to use. We will have demonstrated this if our test-level metrics meet the criteria above.

Planning for Moderated Usability Tests

Creating realistic scenarios:

You come across a new software update on your Android and notice a change in your phone privacy settings labeled as ‘Privacy Manager’.

Sample of goal-oriented tasks:

1) You want to know how often your camera was accessed yesterday. How would you go about doing that?

2) You recently downloaded XYZ app and want to stop third parties from accessing your approximate location to show you targeted ads based on location. How would you do that?

3) You now want to make sure that all apps will stop accessing your approximate location for advertisement. Find out which apps are accessing your approximate location for ads and stop them from doing so.

Planned and designed facilitator script, SEQ questionnaire, post-session questionnaire, notetaker template, consent forms (IRB-approved)

Moderated Usability Tests in Action

Two designers and one developer sat in on sessions and took part in note taking and controlling Figma Mirror when needed.

Snapshot @ the meeting room with tripod, webcam (for recording participants’ face and body language), iPhone camera (for recording hand movements and screen interactions)

Caption: capturing hand movements and touch points

Analyzing data from Usability Tests

I played back the recorded sessions and captured notes in spreadsheets with columns such as the observed task, where the issue occurred, a description of the issue or key quotes, the persistence/impact/frequency of the issue, and screenshots of participants’ key gestures and facial expressions. Each team member who attended the sessions read through their own notes and cross-referenced them with my notes on the spreadsheet.

I rated each issue from high to low severity based on its persistence, impact, and frequency so that high-severity issues could be prioritized. Color-coded based on participant ID
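One way to turn persistence, impact, and frequency into a severity level is sketched below. The actual scoring scheme used in the spreadsheet is not described, so the 1 to 3 rating scale, the multiplication, and the cut-offs are all assumptions made for illustration, as are the ratings assigned to each example issue.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    description: str
    persistence: int  # 1 (one-off) to 3 (recurs every time); assumed scale
    impact: int       # 1 (cosmetic) to 3 (blocks the task); assumed scale
    frequency: int    # 1 (one participant) to 3 (most participants); assumed scale

    @property
    def score(self) -> int:
        # Assumed scheme: multiply the three ratings (range 1-27).
        return self.persistence * self.impact * self.frequency

    @property
    def severity(self) -> str:
        # Assumed cut-offs, chosen only for this sketch.
        if self.score >= 18:
            return "high"
        if self.score >= 6:
            return "medium"
        return "low"


# Issue descriptions paraphrase the pain points; the ratings are hypothetical.
issues = [
    Issue("Graph purpose unclear at first glance", 2, 3, 3),
    Issue("Internal vs. third-party use confusing", 1, 2, 1),
    Issue("Could not find cross-app location control", 3, 3, 2),
]

for issue in sorted(issues, key=lambda i: i.score, reverse=True):
    print(f"{issue.severity:<6} {issue.score:>2}  {issue.description}")
```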

Documenting observational metrics across all tasks

Tracking metrics

Our NPS came out to 20, which was 20 points above our target of at least 0!

"I'd be happy using this app because I'd have knowledge on why my data is being shared and I'd go explore and see what's in it"

“Before using this app, I didn't know I have categories like geotagging or some other things that they use in location settings specifically, but after this app, I'd be more conscious that I have the control or power to change these particular things”

Our converted average SUS score came out to 73.82, at the 68th percentile, which was 5.82 points above our target of 68!

Pairing quantitative data with qualitative data to get the full view

Key Pain Points

App settings:

- A few participants initially struggled to understand the difference between internal use and third-party use because of the third-party names

"I didn't understand what does app internal use and third party use mean at first"

Global settings:

- Most users ended up manually checking a particular permission (location) under each app individually, which they found annoying, because they thought they could not control it across multiple apps at once

- A few users were confused about the controls because there wasn’t enough indication that the controls were theirs to set

- Some users were surprised to see a list of apps under purposes because they’re accustomed to current Android patterns, and they failed to recognize that they could reach individual app settings from the global settings page

“I was expecting to show me a general list of apps. This isn't what I was expecting to see so I'm just going to go back to home and find the appropriate settings"

Recommendation:

- A few users wanted recommendations to link to trusted external sources, because they wanted more information on how to protect their privacy and felt they lacked the knowledge to do so

Graph:

- Most users had difficulty with finding where frequently accessed data is - they had no idea that the graph served for that purpose initially

"There are so many things on the screen. The first time I saw it, I didn't know where to go"

- Most users expected to see more in-depth information by tapping into individual bar graphs as the graph was not sufficient to view frequency access in detail

“I just want to go into detail of this camera setting but I can't seem to find a way to just look out for more detail"

“If I select the app category, I should see the list of all the gaming apps that use my camera. There should be ways to find detailed status of camera usage so I'd want to see that"

Printed out the spreadsheet cells and clustered these notes to pull out key insights

Design Recommendations

Distilled findings into design recommendations. Based on suggestions, key design changes have been made. Final design prototype link here

App settings:

- Provide a more accessible point of entry for users to access third party library controls by replacing the advanced button as this made some users feel as if it was unapproachable/not relevant for them

- Consider using helper text that directs the user’s attention to the granular controls, guiding their attention to the controls of off/ask/on and communicating the value of what “ask” does

- Enable users to search against multiple criteria in the app menu by promoting consistent categorization of apps across both app settings and graph by using app categories shown on graph

Final design iteration for app settings based on recommendations

Global settings:

- Consider adding more points of entry to global settings, as some users expected to find this capability in the list of apps in the app menu and wanted the flexibility to control all apps without going into each app individually

- Support first-time use for new users, who are often unsure how to control permissions across all apps, with an onboarding flow that exposes them to the different features

- Consider clearly communicating the value of the override status when individual apps have different settings from what is applied globally across all apps

- Give users clear feedback on what actions they can take by providing 1) a visual indicator of what they can expand and 2) a clear indication of where they are when they tap into individual app settings, to avoid confusion

Final design iteration for global settings based on recommendations

Recommendation:

- Guide users with resources that they can refer to so that they can learn more about how to protect their data (like referring to PrivacyGrade)

Final design iteration for recommendations based on suggestions

Graph:

- Provide users the flexibility to toggle between monthly and weekly view so that the graph is more readable and less cluttered

- Provide more clarity on what the list of apps shown below the graph are, as some users were unsure whether those were a comprehensive list of all apps that used their data or a shortlist of apps, and enable users to directly control for these apps

- Leverage the list of apps shown below the graph when users tap into individual bar graphs to provide them a more in-depth layer of information as most users wanted to see more information on demand after viewing the first layer of information (frequency of data access) and know what classifies as an unusual activity

“I want concise information about unusual activity”

Final design iteration for graph based on recommendations